AI - The AI Supply Chain - Part 1 - The Data Center

- brencronin

- 2 days ago

Updated: 19 hours ago

The Foundation of AI: From Data Centers to Intelligence

Artificial Intelligence (AI) is no longer a futuristic concept; it’s here, and it’s transforming how we live, work, and defend our digital environments. At its core, AI is the result of several mature technologies converging: high-performance computing, massive data availability, and advanced algorithms.

Trying to grasp the full scope of AI can feel overwhelming. That’s why this article series will break down the AI supply chain, exploring each layer of the ecosystem that powers intelligent systems, from the ground up.

Let’s start at the foundation: The Data Center.

While Hollywood portrays AI as a single chip embedded in a robot (like the Terminator’s processor), the real power of AI lies in massive compute infrastructure: hundreds or thousands of specialized chips working in parallel across globally distributed data centers. This infrastructure forms the brainpower behind everything from large language models to other AI capabilities.

“The massive datacenters that make all this possible are being built by construction firms, steel and other manufacturers, and innovative advances in electricity and liquid cooling, all reliant on large numbers of skilled electricians and pipefitters, including members of organized labor unions. Together, all these groups have enabled the technology sector to become an economic backbone for the United States and the world.” - Brad Smith (Microsoft), ‘A vision for technology success during the next four years’

While data centers have long served as hubs for organizational infrastructure and cloud services, the rise of AI has triggered a massive global construction boom. Organizations are racing to build next-generation data centers that can meet the soaring demand for AI processing capacity, especially for workloads involving large-scale model training and inference.

To explore where these AI-ready facilities are emerging, the site datacentermap.com provides a comprehensive global index of operational and planned data centers.

Understanding Data Center Sizing for AI

Modern data centers are often described in terms of their power capacity, measured in megawatts (MW). This metric helps quantify the scale of compute infrastructure they can support. For example:

A single server-filled rack is often rated to consume about 10 kW (10,000 watts) of power. The diagram below displays a top view of 10 racks in a row. Ten racks, each rated at 10 kW, would consume 100 kW of power.

If you then took 10 of these rows, you would have 100 kW × 10 = 1,000 kW = 1 MW.

A 50 MW data center could be thought of as housing the equivalent of fifty 1 MW compute blocks. While “1 MW pods” help visualize scaling, real data center layouts vary: one large room may support many megawatts, provided it has enough space, cooling, and infrastructure to accommodate the necessary racks.

Note: These figures are illustrative. Actual configurations may vary based on cooling, spacing, and power distribution. The key concept is that as AI demand grows, so does the power and footprint of the data centers supporting it.
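The rack-to-megawatt roll-up above can be sketched in a few lines of Python. This is a minimal illustration assuming the example figures from this section (10 kW per rack, 10 racks per row, 10 rows per 1 MW block); the function names are hypothetical, and it treats the facility's full rating as IT load, ignoring cooling and distribution overhead.

```python
# Illustrative power roll-up using the example figures from this section.
# Assumptions: ~10 kW per rack, 10 racks per row, 10 rows per 1 MW block.
KW_PER_RACK = 10
RACKS_PER_ROW = 10
ROWS_PER_BLOCK = 10


def row_kw(kw_per_rack: float = KW_PER_RACK, racks: int = RACKS_PER_ROW) -> float:
    """Power drawn by one row of racks, in kW."""
    return kw_per_rack * racks


def block_mw(rows: int = ROWS_PER_BLOCK) -> float:
    """Power drawn by a block of rows, converted from kW to MW."""
    return row_kw() * rows / 1_000


def racks_for_facility(facility_mw: float, kw_per_rack: float = KW_PER_RACK) -> int:
    """Approximate rack count a facility could feed if all its power went to IT load."""
    return int(facility_mw * 1_000 // kw_per_rack)


if __name__ == "__main__":
    print(f"One row:   {row_kw():.0f} kW")      # 100 kW
    print(f"Ten rows:  {block_mw():.1f} MW")    # 1.0 MW
    print(f"50 MW facility: ~{racks_for_facility(50):,} racks")  # ~5,000 racks
```

Dense GPU racks for AI typically draw considerably more than 10 kW, so raising `kw_per_rack` shows how quickly the rack count shrinks for the same facility power.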

AI workloads, particularly those involving model training, require tremendous compute power, often supported by specialized infrastructure like GPU clusters. This is driving a global boom in data center construction.

Hyperscalers & Hyperscale Data Centers

In the data center industry, hyperscale data centers refer to facilities specifically designed to support large-scale, high-performance workloads. While there’s no strict threshold, hyperscale data centers typically feature:

Massive capacity - often exceeding 5,000 servers and 10,000 square feet

High-speed networking - 40 Gbps or faster connectivity

Optimized infrastructure - engineered for efficiency, scalability, and low latency

Rather than a single building, many hyperscale deployments consist of multi-building campuses, allowing operators to scale capacity over time. For example, the OpenAI Stargate campus in Abilene, Texas is projected to grow from an initial 350 MW to a massive 1.2 GW, more than tripling its original scale.

Who Are the Hyperscalers?

The term "hyperscaler" typically refers to companies operating at the highest tiers of cloud and compute infrastructure. The primary players are:

Microsoft

Amazon

Google

Meta

These companies operate global networks of hyperscale data centers to support public cloud, AI, and platform services.

Other Hyperscale Builders

You don’t have to be a cloud giant to build at hyperscale. Many companies specialize in constructing and operating large-scale data center campuses, including:

Aligned Data Centers

Ascenty Data Centers

CloudHQ Data Centers

CyrusOne Data Centers

Digital Realty Data Centers

EdgeConneX Data Centers

Equinix Data Centers

QTS Data Centers

Stream Data Centers

Vantage Data Centers

(...and dozens of others)

These companies often partner with hyperscalers or major enterprises to build custom environments that meet demanding workload needs.

Note: Building hyperscale facilities is as much a construction and logistics challenge as a technology endeavor. As such, many of these operators function more like infrastructure construction firms than traditional tech companies.

The Business Model Behind Large Data Centers

The business of building large-scale data centers is driven primarily by the demands of hyperscalers and major cloud providers. While these companies are technology-focused, the actual construction and operation of data centers is a massive infrastructure and real estate undertaking, often handled by specialized data center providers.

Leasing vs. Owning

Although hyperscalers like Microsoft, Amazon, and Google do build their own facilities, they more frequently lease capacity from data center operators. This model allows them to scale quickly without tying up capital in construction projects.

Revenue Model for Data Center Operators

Here’s a simplified example of how the economics work:

The market rate for leasing data center power is roughly $175–$225 per kilowatt (kW) per month.

At $200/kW/month, a 50 megawatt (MW) data center (50,000 kW) would generate:

$200 × 50,000 kW = $10,000,000 per month

Annual revenue: $120 million

These predictable, long-term lease agreements are highly attractive to investors.
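The lease arithmetic above can be reproduced as a short Python sketch that also sweeps the quoted $175–$225 range. It assumes the full 50 MW is leased at a flat rate with no vacancy or ramp-up period; the function names are illustrative only.

```python
# Simplified lease-revenue model for a data center operator.
# Assumptions: 100% of capacity leased, flat $/kW/month rate, no vacancy.

def monthly_revenue(capacity_kw: float, rate_per_kw_month: float) -> float:
    """Monthly lease revenue: leased kW times the monthly rate per kW."""
    return capacity_kw * rate_per_kw_month


def annual_revenue(capacity_kw: float, rate_per_kw_month: float) -> float:
    """Twelve months of lease revenue at a constant rate."""
    return 12 * monthly_revenue(capacity_kw, rate_per_kw_month)


CAPACITY_KW = 50_000  # a 50 MW facility

for rate in (175, 200, 225):
    month = monthly_revenue(CAPACITY_KW, rate)
    year = annual_revenue(CAPACITY_KW, rate)
    print(f"${rate}/kW/month -> ${month:,.0f}/month, ${year / 1e6:,.0f}M/year")
```

At $200/kW/month this reproduces the $10 million per month and $120 million per year figures; the low and high ends of the range give roughly $105M and $135M per year.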

Capital Investment and ROI

To build a 50 MW facility, the cost can range from $300 million to $500 million, depending on land, location, cooling systems, and power infrastructure. Once operational, leasing that capacity at the market rates above ($175–$225/kW/month) could yield roughly $105M–$135M annually, making it a lucrative long-term asset for infrastructure investors.
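A rough way to see why investors find this attractive is a simple payback calculation: capital cost divided by net annual cash flow. The Python sketch below assumes about $120M of annual revenue and, by default, zero operating costs; the `annual_opex` parameter is a hypothetical hook, and the model ignores financing, taxes, discounting, and lease-up time, so real payback periods would be longer.

```python
# Simple (undiscounted) payback period for a data center build.
# Assumptions: constant annual revenue; operating costs default to zero.

def simple_payback_years(capex: float, annual_revenue: float,
                         annual_opex: float = 0.0) -> float:
    """Years needed to recover up-front capital from net annual cash flow."""
    net_cash_flow = annual_revenue - annual_opex
    if net_cash_flow <= 0:
        raise ValueError("net annual cash flow must be positive")
    return capex / net_cash_flow


ANNUAL_REVENUE = 120e6  # ~$120M/year from a fully leased 50 MW facility

for capex in (300e6, 500e6):
    years = simple_payback_years(capex, ANNUAL_REVENUE)
    print(f"Capex ${capex / 1e6:.0f}M -> ~{years:.1f} years to recover")
```

Under these generous assumptions, capital is recovered in roughly 2.5 to 4.2 years; adding power, staffing, and maintenance costs through `annual_opex` stretches that out.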

Importantly, the data center provider only supplies the physical space, power, cooling, and connectivity. The customers install and operate their own IT hardware—racks, servers, and network gear.

This separation of responsibilities allows technology firms to stay focused on their core business while outsourcing the complex, capital-intensive job of building and managing the physical facility.

Data Center Locations and Hotspots

While data center maps often show clusters near major metropolitan areas, the full story is more nuanced. True data center hotspots emerge where three key factors align:

Abundant high-speed fiber-optic connectivity

Lower energy and real estate costs

Presence of anchor hyperscaler facilities

These hotspots become strategic zones because of one core principle: proximity matters. Even with high-speed fiber, data latency increases with distance. In the cloud ecosystem, where services constantly exchange data across platforms and providers, physical proximity reduces lag and improves performance.
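To put the distance penalty in numbers, the Python sketch below estimates the minimum round-trip propagation delay over fiber. It assumes light travels through glass at the vacuum speed of light divided by a refractive index of about 1.47 (a typical value for single-mode fiber, used here as an assumption) and counts only time-of-flight, so real network paths, with routing detours and switching, will be slower.

```python
# Lower bound on round-trip latency over optical fiber.
# Assumption: refractive index ~1.47; only propagation delay is modeled.
C_VACUUM_KM_PER_S = 299_792.458   # speed of light in vacuum, km/s
FIBER_REFRACTIVE_INDEX = 1.47     # typical value for silica single-mode fiber


def fiber_rtt_ms(distance_km: float, n: float = FIBER_REFRACTIVE_INDEX) -> float:
    """Round-trip propagation delay in milliseconds for a straight fiber run.

    Excludes routing detours, switching, queuing, and serialization delays,
    which all add to this floor on real networks.
    """
    speed_km_per_s = C_VACUUM_KM_PER_S / n
    return 2 * distance_km / speed_km_per_s * 1_000


for km in (10, 100, 1_000, 4_000):
    print(f"{km:>5} km -> {fiber_rtt_ms(km):6.2f} ms round trip")
```

The result works out to roughly 1 ms of round-trip delay per 100 km of fiber. That is negligible for a single request, but it compounds when services make many chained calls to each other, which is why clustering facilities a few miles apart pays off.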

One prime example is “Data Center Alley” in Northern Virginia, just west of Washington, D.C. This region hosts one of the highest concentrations of data centers in the world, with dozens of large data centers all within a radius of a few miles.

Unexpected Data Center Hotspots

While it’s no surprise to find data center growth in areas like Dallas, Texas, close to major metropolitan hubs, other locations might seem less intuitive at first glance. Phoenix, Arizona and parts of Ohio, for example, have emerged as major data center hotspots.

At first, you might wonder: Why would anyone build massive data centers in the scorching heat of Phoenix? The answer lies in the trade-offs. Despite the high temperatures, Phoenix offers lower costs, fewer regulatory hurdles, and reduced natural disaster risks compared to places like California. For data center operators, managing heat is a solvable problem, especially when it means benefiting from more favorable business conditions.

The Trillion-Dollar AI Arms Race

Governments and private companies alike are pouring billions, and soon trillions, of dollars into the AI arms race. At the heart of this surge is the construction of massive data centers and the high-performance computing hardware that powers them.

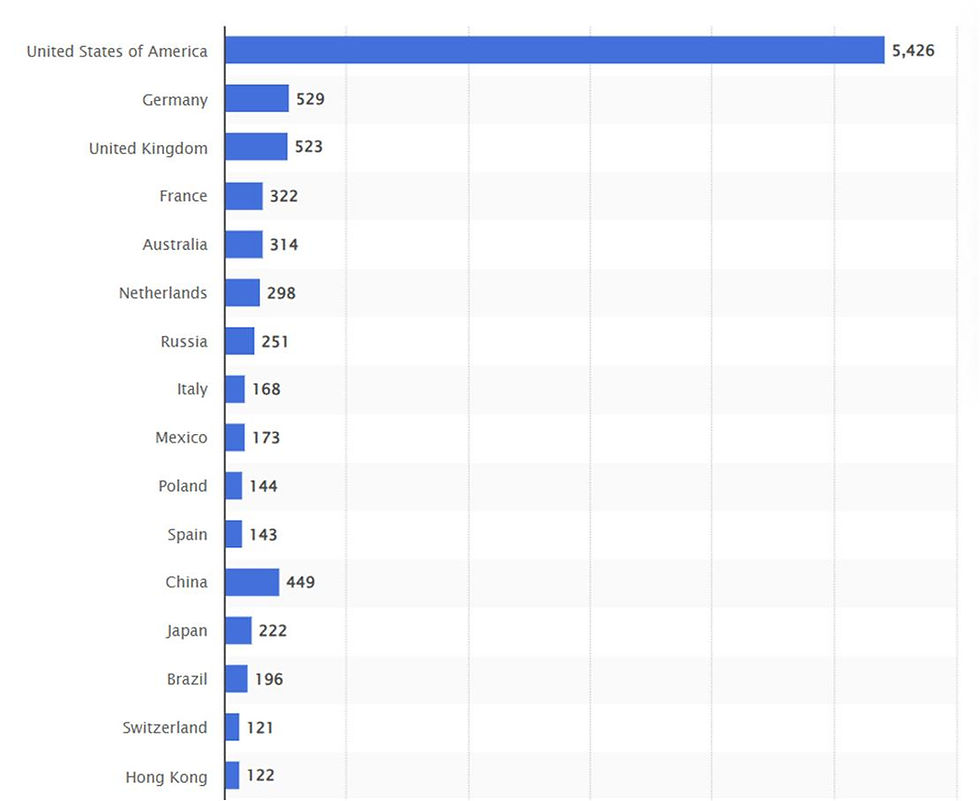

Currently, the United States leads the world in overall data center capacity, but the gap is narrower than raw numbers might suggest. While the U.S. boasts approximately 20,000 data centers compared to China's 500, this doesn’t mean the U.S. has 40 times more data center computing capacity.

In terms of energy capacity, a better indicator of actual compute scale, the U.S. operates around 20,000 megawatts (MW) of data center power, while China has an estimated 5,000 MW. That’s a 4:1 capacity ratio, not 40:1. Put another way, the average U.S. facility in these figures is about 1 MW, while the average Chinese facility is about 10 MW. The discrepancy is largely due to the many older, smaller, and less efficient facilities still in use across the U.S.
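The gap between the count ratio and the capacity ratio comes down to average facility size. The Python sketch below simply reruns that arithmetic using the figures quoted in this section, treated as rough estimates rather than authoritative data; the helper name is illustrative.

```python
# Count ratio vs. capacity ratio, using the estimates quoted in the article.

def average_mw_per_facility(total_mw: float, facility_count: int) -> float:
    """Mean power capacity per data center, in MW."""
    return total_mw / facility_count


US_COUNT, US_MW = 20_000, 20_000   # facilities, total MW (article's estimates)
CN_COUNT, CN_MW = 500, 5_000

count_ratio = US_COUNT / CN_COUNT      # 40.0
capacity_ratio = US_MW / CN_MW         # 4.0
us_avg = average_mw_per_facility(US_MW, US_COUNT)   # 1.0 MW
cn_avg = average_mw_per_facility(CN_MW, CN_COUNT)   # 10.0 MW

print(f"Count ratio {count_ratio:.0f}:1, capacity ratio {capacity_ratio:.0f}:1")
print(f"Average size: U.S. {us_avg:.1f} MW vs. China {cn_avg:.1f} MW per facility")
```

Because the average facility in the second set of figures is ten times larger, a 40:1 difference in site count shrinks to a 4:1 difference in power capacity.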

Strategic Lag in AI Capacity Development

One of the key challenges in scaling AI capacity is the long lead time required to build data centers and to manufacture the advanced computing hardware that goes into them. Because of this, much of the recent data center boom was driven by anticipatory demand: building capacity before it’s urgently needed to stay ahead of future AI growth.

As of early 2025, there’s been a noticeable slowdown in new leases and construction starts by hyperscalers. However, this isn’t a sign of decline, but rather a strategic shift. Instead of rapid expansion across the board, companies are now focusing on targeted growth in regions that offer better economics, infrastructure, or strategic value.

As AI compute demand continues to evolve, so will the urgency and scale of the infrastructure needed to support it. Ultimately, this isn’t just a technological competition. It’s a global economic and geopolitical race, with long-term implications for innovation leadership and national competitiveness.

Power: The Lifeblood of the Data Center

As we continue to explore the AI supply chain, it's essential to understand that none of it operates without power. In fact, power is the lifeblood of every data center, fueling everything from high-density server racks to cooling systems and network operations.

Modern data centers supporting AI workloads are massive power consumers, and that demand is only accelerating. Training large language models or running inference at scale requires tens of megawatts per facility, often supported by dedicated substations and complex energy infrastructure.

To put it into perspective:

A typical hyperscale data center may require 50 to 100 MW or more.

That’s enough to power tens of thousands of homes.

Some AI-focused campuses are projected to reach over 1 gigawatt (1,000 MW) of total power capacity.
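The “tens of thousands of homes” comparison can be checked with a quick Python sketch. It assumes an average U.S. household uses roughly 10,500 kWh of electricity per year (an approximate figure used here as an assumption) and that the data center draws its full rated power around the clock, which overstates typical real-world load.

```python
# How many average homes match a data center's power draw?
# Assumptions: ~10,500 kWh/year per home; facility runs at full rated power 24/7.
HOURS_PER_YEAR = 8_760
HOME_KWH_PER_YEAR = 10_500


def homes_equivalent(facility_mw: float,
                     home_kwh_per_year: float = HOME_KWH_PER_YEAR) -> int:
    """Number of homes whose combined average draw equals the facility's load."""
    avg_home_kw = home_kwh_per_year / HOURS_PER_YEAR   # about 1.2 kW per home
    return round(facility_mw * 1_000 / avg_home_kw)


for mw in (50, 100, 1_000):
    print(f"{mw:>5} MW ~ {homes_equivalent(mw):,} homes")
```

Under these assumptions, a 50 to 100 MW facility corresponds to roughly 40,000 to 85,000 homes, and a 1 GW campus to several hundred thousand.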

This growing demand is reshaping power strategy at the global level:

Data center operators are forming long-term energy contracts with utility providers.

Some are investing in on-site energy generation, including solar, wind, and even nuclear microgrids.

Others are optimizing for grid interconnection, redundancy, and energy efficiency to meet environmental and regulatory goals.

As AI adoption expands, power availability and reliability will be among the most critical constraints, and enablers, for future innovation.

In the next section, we’ll dive deeper into how energy strategy, sustainability, and infrastructure scalability are influencing the next generation of AI data centers, a crucial component of the AI supply chain.

References

A vision for technology success during the next four years (Brad Smith, Microsoft)

Data Center Maps

Stargate Data Center

History of Northern Virginia's Data Center Alley

Data center build-out slowdown
